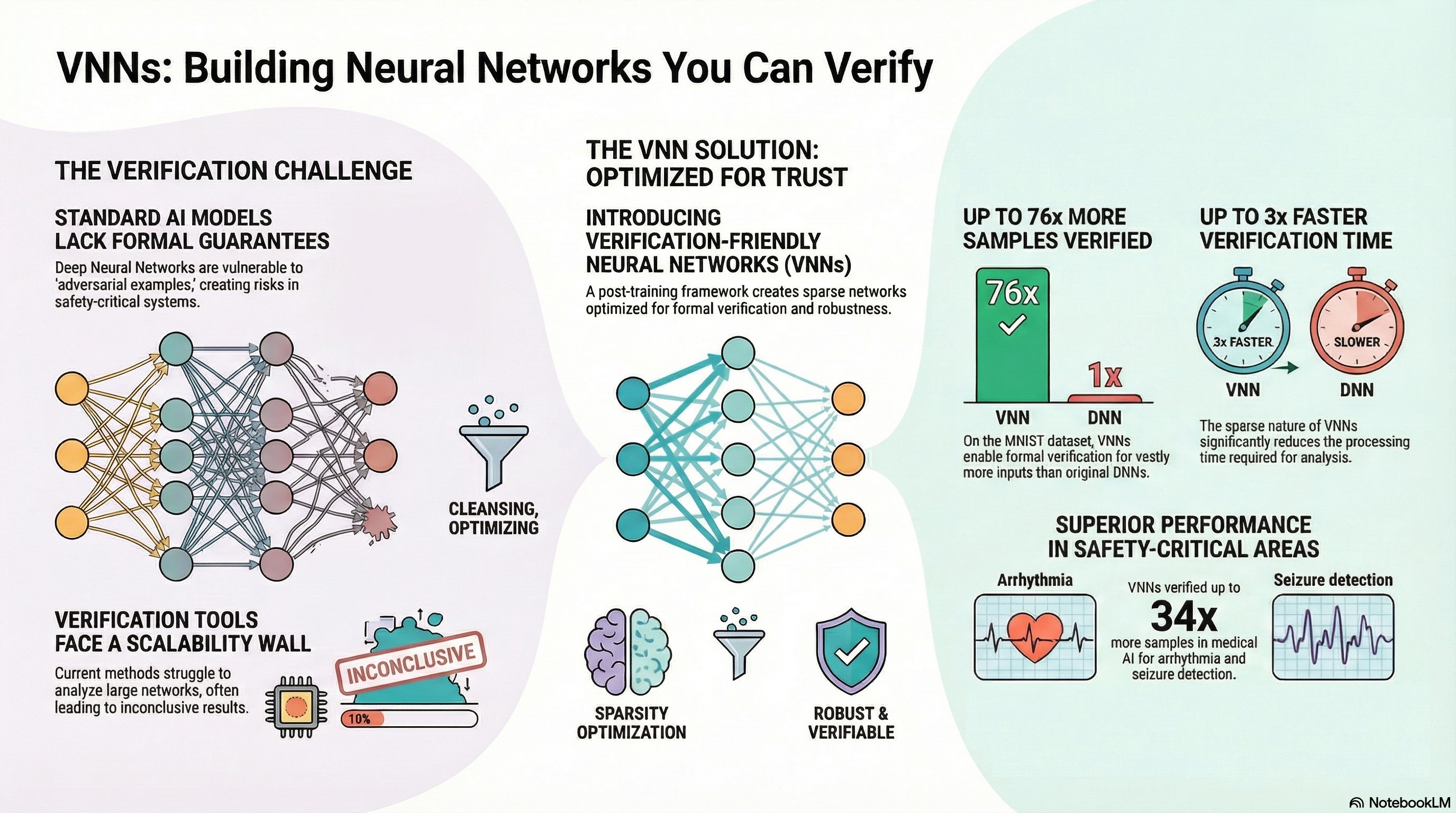

VNN: Verification-Friendly Neural Networks with Hard Robustness Guarantees

Presented at the International Conference on Machine Learning (ICML) 2024

Formal verification of neural networks often struggles to scale, which limits the robustness guarantees that can be obtained in practice. This work introduces a post-training approach that transforms existing models into verification-friendly networks without sacrificing accuracy. As a result, robustness can be established faster, and for significantly more inputs, than on the original models.