Guest PhD Researcher at EPFL

As a Guest PhD Student at the Swiss Federal Institute of Technology in Lausanne (EPFL) in 2024, I conducted research under the supervision of Prof. Pascal Frossard. My work focused on task arithmetic for editing pre-trained Vision Transformers in multi-task learning settings, with an emphasis on model knowledge management and performance optimization. The research applied transformer-based techniques and used cloud computing resources to support scalable experimentation.

Collaboration with Google DeepMind

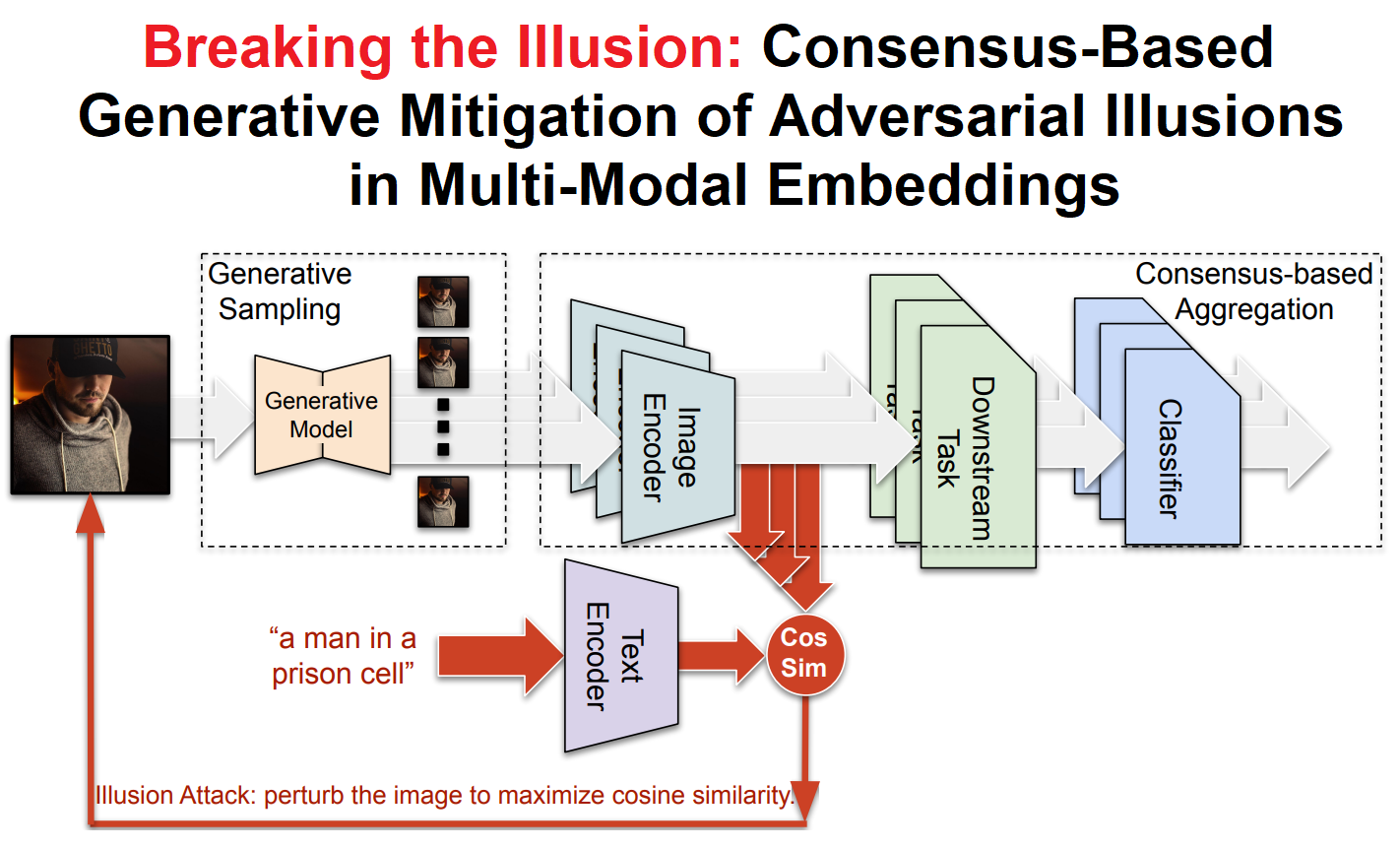

As part of the 2025 Google Research Scholar Program award granted to my supervisor, Assoc. Prof. Amir Aminifar, I contributed to the project "FiT-LLM: Efficient Fine-Tuning of Large Language Models" in collaboration with Google DeepMind. My colleagues and I are investigating adversarial illusion attacks in multimodal models. Our focus is on mitigating these attacks by developing robust machine learning systems that move beyond standard accuracy metrics to improve safety and real-world reliability.